Neural Architecture Search of efficient Recurrent Networks for TinyML

- Subject: Neural Architecture Search, TinyML, Recurrent Neural Networks

- Type: Master's thesis

- Tutor:

Context

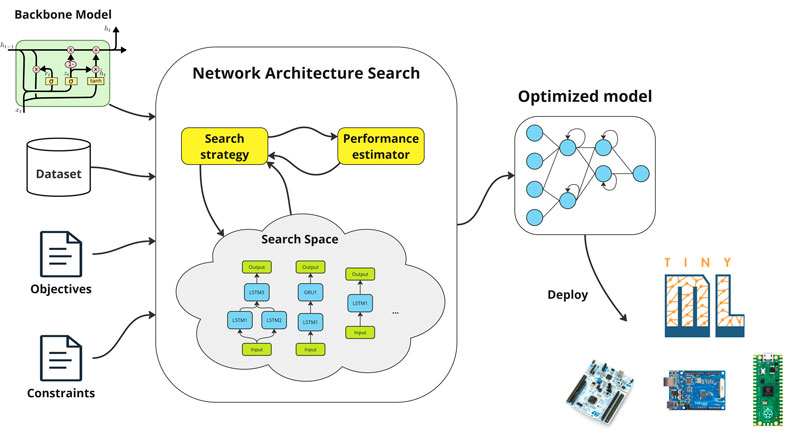

TinyML is a branch of machine learning that focuses on creating models small enough to run on low-power, resource-constrained devices such as microcontrollers and other edge devices. TinyML enables a wide range of applications that require real-time response and energy efficiency, such as always-on visual and audio wake-word detection, predictive maintenance for industrial machinery, inertial navigation, and on-device handwriting capture and recognition. However, executing neural networks on tiny devices is very challenging because of the extremely limited computational resources, memory, and energy budget. Recurrent neural networks are a typical choice for on-device time-series processing. Although most state-of-the-art neural network architectures are feed-forward, recurrent neural networks typically have a smaller RAM footprint than convolutional networks, which makes them well suited to tiny devices. Even so, recurrent architectures require additional effort to adapt them to specific hardware, including hyperparameter and network architecture optimization. This work aims to explore the methodology of hardware-aware Neural Architecture Search (NAS) for recurrent neural networks.

Goals

In this work, the state of the art in hardware-aware NAS for time-series regression and classification networks will be reviewed. Based on an analysis of existing NAS approaches for convolutional neural networks, a methodology for the hardware-aware search of recurrent neural networks will be developed. The architecture search will then be performed on a selection of synthetic and real-world application datasets. Finally, the discovered architectures will be deployed and evaluated on microcontroller-based platforms.
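
As a rough illustration of what "hardware-aware" can mean in such a search, the sketch below enumerates a toy space of recurrent architectures and keeps only the candidates whose weights fit a given flash budget. It is not the methodology to be developed in the thesis. The function names, the search space, the budget, and the one-byte-per-weight (int8) assumption are all hypothetical, and the parameter count assumes one bias vector per gate, a convention that differs between frameworks.

```python
import itertools

# Weight blocks per cell type: a vanilla RNN has 1, a GRU 3, an LSTM 4.
GATES = {"rnn": 1, "gru": 3, "lstm": 4}


def recurrent_params(cell, input_size, hidden_size, num_layers):
    """Count weights of a stacked recurrent network (one bias vector per gate)."""
    total = 0
    in_size = input_size
    for _ in range(num_layers):
        # Each gate has input weights, recurrent weights, and a bias.
        total += GATES[cell] * (hidden_size * (in_size + hidden_size) + hidden_size)
        in_size = hidden_size  # deeper layers read the previous layer's output
    return total


def state_values(cell, hidden_size, num_layers):
    """Values carried between time steps: h per layer, plus c for an LSTM."""
    per_layer = 2 * hidden_size if cell == "lstm" else hidden_size
    return per_layer * num_layers


def feasible_candidates(input_size, flash_budget_bytes, bytes_per_weight=1):
    """Enumerate a toy search space and keep candidates within the flash budget."""
    space = itertools.product(GATES, (8, 16, 32, 64), (1, 2))
    kept = []
    for cell, hidden, layers in space:
        n_params = recurrent_params(cell, input_size, hidden, layers)
        if n_params * bytes_per_weight <= flash_budget_bytes:
            kept.append((cell, hidden, layers, n_params))
    # Largest feasible models first; a real search would rank by validation accuracy.
    return sorted(kept, key=lambda c: c[3], reverse=True)


if __name__ == "__main__":
    # Hypothetical setting: 6 input channels (e.g. an IMU), 2000 bytes for weights.
    for candidate in feasible_candidates(input_size=6, flash_budget_bytes=2000):
        print(candidate)
```

A real hardware-aware search would replace the static parameter count with measured or modelled latency, RAM, and energy on the target microcontroller, and would train or estimate the accuracy of each candidate instead of ranking by size.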

Requirements

- Experience with deep learning and a DL framework such as PyTorch or Keras/TensorFlow

- Experience with Python and C++

- Motivation and independent working style